Anatomy of Modern Ad Fraud Operations

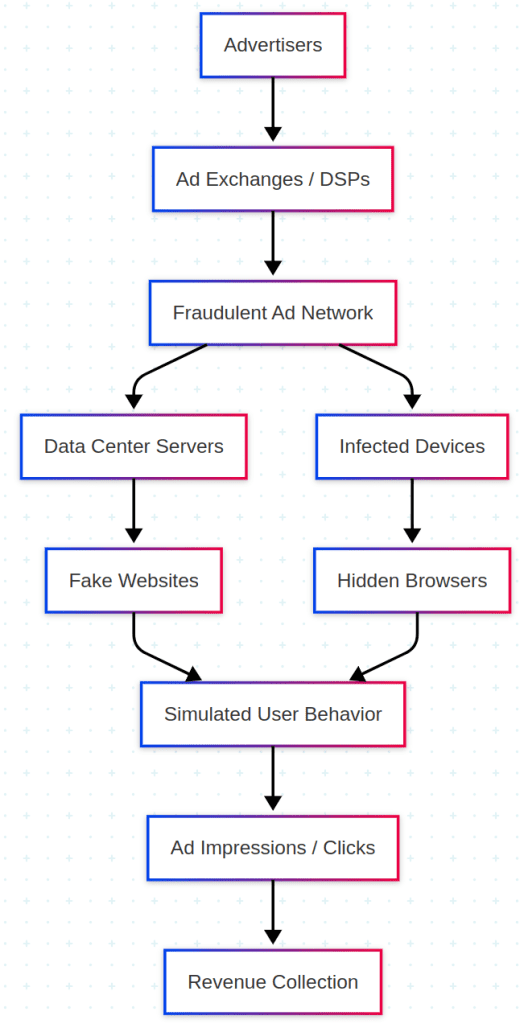

Fraudsters build elaborate infrastructure to impersonate both legitimate publishers and human audiences. A notorious example is Methbot, a Russian-run scheme that rented over 1,900 servers in datacenters and acquired 571,000+ IP addresses to masquerade as residential users. The Methbot operation spoofed more than 6,000 premium domains, effectively creating fake websites that looked like CNN or ESPN, and then programmed its servers to simulate human behavior: moving a fake mouse cursor, scrolling pages, randomly clicking, and even faking social media logins to appear authentic. This allowed the fake sites to load real video ads in the background and claim credit for up to 300 million ad views per day, defrauding advertisers of an estimated $3–5 million daily.

Another large-scale operation, 3ve, combined multiple offensive tactics. One sub-scheme (3ve.1) deployed bots in data centers similar to Methbot, while another (3ve.2) assembled a botnet of 1.7 million malware-infected PCs globally. These infected computers ran invisible instances of Chrome or Internet Explorer, instructed by a central command-and-control (C2) server to visit the counterfeit websites and load ads in hidden windows. To avoid detection, 3ve’s operators even hijacked blocks of IP addresses via BGP (the Border Gateway Protocol that routes traffic between networks) so they could continually rotate through “clean” IPs: if an IP got flagged as fraudulent, they would seamlessly replace it. This constant rotation of IP addresses, combined with the use of residential IP space, helped 3ve appear as millions of legitimate users while insulating the core botnet from takedown.

Fraudsters also leverage mobile devices and malware-infected apps in ad fraud. DrainerBot (exposed by Oracle in 2019) hid in seemingly innocuous Android apps via a corrupted SDK. Once on a user’s phone, the DrainerBot code would silently load invisible video ads in the background (in apps with no visible video player) and report false viewership back to ad networks. A single infected app could consume over 10GB of data per month on fake video ads, draining users’ batteries and data plans. The fraudsters effectively monetized these stealth ads while users remained oblivious. Other schemes have used headless browsers and device emulators, for instance large “phone farms” running hundreds of Android emulators, to fake mobile app installs and clicks (install and click fraud) or to mimic human “tap” behaviors on mobile ads. In all cases, the goal is to spoof engagement metrics (impressions, clicks, view-throughs) without any real human in the loop.

To make the fake interactions believable, today’s ad fraud bots use advanced human-like emulation. They randomize their user-agent strings and browser fingerprints, generate realistic mouse movements and timings, produce fake click sequences, and even perform session replay (recreating recorded real user browsing sessions). The 3ve operation, for example, was noted for mimicking human behaviors like mouse movements and random pauses to avoid looking robotic. Some schemes layer multiple ads in a single real ad slot and hide all but the top one with CSS (ad stacking), squeeze full-size ads into 1x1 pixel frames (pixel stuffing), or rapidly refresh ads; each trick inflates impression counts. Fraudsters also scrape content from legitimate sites to populate their spoofed domains with real articles or videos, so that automated scanners see “normal” content around the ads. All of these offensive techniques are designed to defeat basic fraud filters and convince advertisers that genuine users are engaging with ads, when in reality it’s a well-orchestrated illusion.
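Ad stacking and pixel stuffing leave a geometric footprint that a measurement script can look for. The sketch below assumes a hypothetical `RenderedAd` record (the position and size of each ad as rendered on the page, which in practice would come from an in-page verification tag) and flags ads squeezed to a single pixel in either dimension, plus pairs of ads that overlap almost completely. The 90% overlap threshold is an arbitrary illustrative choice, not an industry standard.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class RenderedAd:
    """Hypothetical record of one ad as rendered on the page (CSS pixels)."""
    ad_id: str
    x: int
    y: int
    width: int
    height: int


def is_pixel_stuffed(ad: RenderedAd, max_side: int = 1) -> bool:
    """Pixel stuffing: a full ad squeezed into a frame too small to see."""
    return ad.width <= max_side or ad.height <= max_side


def overlap_ratio(a: RenderedAd, b: RenderedAd) -> float:
    """Intersection area divided by the area of the smaller ad (0.0 to 1.0)."""
    ix = max(0, min(a.x + a.width, b.x + b.width) - max(a.x, b.x))
    iy = max(0, min(a.y + a.height, b.y + b.height) - max(a.y, b.y))
    smaller = min(a.width * a.height, b.width * b.height)
    return (ix * iy) / smaller if smaller > 0 else 0.0


def find_stacked(ads: list[RenderedAd], threshold: float = 0.9) -> list[tuple[str, str]]:
    """Ad stacking: pairs of ads that occupy (almost) the same rectangle."""
    return [
        (a.ad_id, b.ad_id)
        for a, b in combinations(ads, 2)
        if overlap_ratio(a, b) >= threshold
    ]


if __name__ == "__main__":
    page = [
        RenderedAd("a1", 0, 0, 300, 250),
        RenderedAd("a2", 0, 0, 300, 250),    # layered exactly under a1
        RenderedAd("a3", 0, 300, 300, 250),  # a normal, separate slot
        RenderedAd("a4", 500, 0, 1, 1),      # 1x1 "stuffed" ad
    ]
    print(find_stacked(page))                                 # [('a1', 'a2')]
    print([ad.ad_id for ad in page if is_pixel_stuffed(ad)])  # ['a4']
```

A production check would also need to account for scrolling, iframes, and z-order; geometry alone is only a first filter.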

Detection Methods and Why Fraud Persists

Given the sophistication of these schemes, detecting ad fraud is a constant cat-and-mouse game. Current methods include log analysis, behavioral analytics, machine learning, signature-based filters, and industry standards like ads.txt:

- Log and Traffic Pattern Analysis: Ad platforms and security teams often monitor traffic logs for anomalies – e.g. a surge of clicks at 3 AM from a single IP range, or a campaign with an unusually high click-through rate but no conversions. Such analysis can flag obvious outliers or known bad IPs. However, clever fraud operations blend in. For instance, Methbot’s fake browsers were distributed across hundreds of thousands of IPs and programmed to stay under certain rate limits. Manual log analysis alone may not catch a well-distributed botnet trickling traffic in a “normal-looking” pattern. It’s also inherently reactive (finding fraud after the fact) and labor-intensive given the billions of ad events daily.

- Machine Learning & Anomaly Detection: Many fraud detection tools employ ML models to detect subtle patterns or anomalies across large datasets. These can identify combinations of features (e.g. timestamp, user agent, geolocation, ad placement) that correlate with invalid traffic. Anomaly detection shines in spotting new fraud variants, but it’s only as good as the data fed in and can be thwarted by fraudsters training their bots to conform to expected norms. For example, if normal users watch a video ad for 20–30 seconds, bots can be programmed to do the same. A determined adversary might even A/B test their bots against verification systems (much like 3ve’s operators did) to see what gets through. ML models also risk false positives if legitimate campaigns have unusual but benign patterns, and they require continuous retraining as fraud tactics evolve.

- Signature and Rule-Based Filtering: Basic defenses use blocklists of known bad IP addresses, data center ASN ranges, or flagged device IDs, as well as heuristic rules (e.g. block any traffic with an impossible combo of device attributes). These signature-based approaches can swiftly stop known bots (for instance, blocking a data center IP that should never serve mobile app traffic). The limitation is that sophisticated fraud rings rapidly mutate – Methbot leased fresh IPs and forged new domain names constantly, rendering simple blocklists ineffective. In the 3ve case, blacklisting IPs alone was futile because the operation could “recycle” IP space and infected machines at will. Hard-coded rules also struggle with scale and maintenance, as the rules need continuous updating to cover new fraud patterns.

- Ads.txt / App-ads.txt Enforcement: The IAB Tech Lab’s ads.txt (for web) and app-ads.txt (for mobile apps) are industry measures to prevent domain spoofing. Publishers post a public file listing which ad networks are authorized to sell their inventory. In theory, this stops a fake site from pretending to be, say, nytimes.com in ad exchanges, because exchanges can check the real nytimes.com/ads.txt and see no mention of the fake seller. In practice, ads.txt has helped reduce blatant spoofing, but it’s not a silver bullet. Its coverage is incomplete – not all publishers or exchanges strictly enforce it – and fraudsters find loopholes (e.g. compromising smaller publishers’ sites and inserting fraudulent ads there, which still have valid ads.txt entries). Also, ads.txt doesn’t tackle malware-driven fraud or bots that legitimize themselves through real publishers. Enforcement varies, and some advertisers still unknowingly buy from unauthorized resellers when enforcement is lax. Essentially, ads.txt is necessary for transparency but not sufficient to catch more insidious forms of IVT (Invalid Traffic).
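To make the ads.txt check concrete: each data record in the file is a comma-separated line giving the ad system’s domain, the publisher’s account ID on that system, the relationship (DIRECT or RESELLER), and an optional certification authority ID. Below is a minimal sketch of the lookup a buyer or exchange might perform for a bid claiming to come from a given site. It assumes the file contents have already been fetched (ads.txt lives at the root of the publisher’s domain), skips variable lines such as `contact=` and `subdomain=` rather than acting on them, and uses made-up domains and IDs; the IAB Tech Lab specification remains the authority on edge cases.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AdsTxtRecord:
    ad_system_domain: str             # field 1: the exchange/SSP's domain
    seller_account_id: str            # field 2: publisher's account ID there
    relationship: str                 # field 3: "DIRECT" or "RESELLER"
    cert_authority_id: Optional[str]  # field 4 (optional), e.g. a TAG ID


def parse_ads_txt(text: str) -> list[AdsTxtRecord]:
    """Parse ads.txt data records, skipping comments and variable lines."""
    records = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0]           # strip comments
        line = line.split(";", 1)[0].strip()  # strip extension fields
        fields = [f.strip() for f in line.split(",")]
        if len(fields) < 3:
            continue  # blank line or a variable such as "contact=..."
        relationship = fields[2].upper()
        if relationship not in ("DIRECT", "RESELLER"):
            continue  # malformed record; a real crawler might log it
        cert = fields[3] if len(fields) > 3 and fields[3] else None
        records.append(AdsTxtRecord(fields[0].lower(), fields[1], relationship, cert))
    return records


def is_authorized(records: list[AdsTxtRecord], ad_system_domain: str, seller_account_id: str) -> bool:
    """True if the publisher's file lists this (ad system, account ID) pair."""
    domain = ad_system_domain.strip().lower()
    return any(
        r.ad_system_domain == domain and r.seller_account_id == seller_account_id
        for r in records
    )


if __name__ == "__main__":
    sample = """# ads.txt for example-publisher.com (hypothetical)
contact=adops@example-publisher.com
exchange-a.example, pub-1234, DIRECT, abc123
exchange-b.example, 5678, RESELLER
"""
    records = parse_ads_txt(sample)
    print(is_authorized(records, "Exchange-A.example", "pub-1234"))  # True
    print(is_authorized(records, "exchange-a.example", "9999"))      # False
```

A seller ID that is absent from the file is exactly the spoofing signal ads.txt exists to expose; as noted above, though, a compromised publisher with a valid file passes this check.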

Given these challenges, a number of commercial verification vendors have emerged to assist in detection. Companies like Human (White Ops), DoubleVerify, and Integral Ad Science (IAS) offer specialized fraud detection as a service. Each has a slightly different focus and approach.

Despite these layers of defense, detection has notable blind spots. A recent investigation by Adalytics highlighted that even top verification companies sometimes fail to filter out blatant bot traffic. In tests, bots that openly identified themselves (carrying tell-tale markers of automation) were still able to receive ads, slipping past filters that should have blocked them. In one example, IAS’s system erroneously treated 77% of known bot visits as legitimate, due to mislabeling in certain optimized settings. The root issue is often information asymmetry – verification scripts running in a browser may not have full access to device and network identifiers that the ad platforms see, and vice versa. Moreover, fraudsters continuously refine their methods: when detection vendors block one vector (say, data center IPs), the adversaries pivot to another (residential proxies or malware on user devices). The result is that some fraud inevitably slips through the cracks of each method. As Laura Edelson of Northeastern University aptly put it, distinguishing a real user from “a person-shaped sock puppet holding a sign saying ‘I am a sock puppet’” should be easy, yet bots still fool the system. This cat-and-mouse dynamic keeps the arms race alive between fraudsters and fraud detectors.
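The signature and rule-based filtering described earlier, and the point that openly self-declared bots should be trivial to stop, both reduce to checks like the sketch below. It assumes a hypothetical blocklist (RFC 5737 documentation ranges stand in for real datacenter ranges, which would come from ASN or hosting-provider data) and an illustrative, far from exhaustive, pattern of automation markers in the user agent. Rules like these only catch the least sophisticated traffic; a bot routed through residential proxies or running on an infected home PC passes every one of them, which is exactly the pivot described above.

```python
import ipaddress
import re

# Hypothetical blocklist: documentation ranges stand in for the
# datacenter/hosting ranges a real deployment would source from ASN data.
DATACENTER_NETWORKS = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("203.0.113.0/24"),
]

# Illustrative (not exhaustive) markers of self-declared automation.
AUTOMATION_UA = re.compile(r"HeadlessChrome|PhantomJS|bot\b|crawler|spider", re.IGNORECASE)


def prebid_reasons(ip: str, user_agent: str, is_mobile_app: bool) -> list[str]:
    """Return the rule names this request trips; an empty list means 'pass'."""
    reasons = []
    addr = ipaddress.ip_address(ip)
    # An IPv6 address is simply "not in" an IPv4 network, so no error is raised.
    if any(addr in net for net in DATACENTER_NETWORKS):
        reasons.append("datacenter_ip")
        # Impossible combination: app traffic should not come from servers.
        if is_mobile_app:
            reasons.append("mobile_app_from_datacenter")
    if AUTOMATION_UA.search(user_agent):
        reasons.append("declared_automation")
    return reasons


if __name__ == "__main__":
    print(prebid_reasons("203.0.113.7", "Mozilla/5.0 HeadlessChrome/120.0", True))
    # ['datacenter_ip', 'mobile_app_from_datacenter', 'declared_automation']
    print(prebid_reasons("192.0.2.10", "Mozilla/5.0 (Windows NT 10.0; Win64; x64)", False))
    # []
```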

The Economics: Fraud Revenue Models vs. Costs

Digital ad fraud isn’t just a technical issue—it’s driven by significant financial incentives. Sophisticated operations are run like startups (albeit criminal ones), with careful ROI calculations. Let’s break down the fraud business model:

On the revenue side, fraudsters typically get paid by selling ad impressions or clicks that appear legitimate. They might pose as a publisher in ad exchanges, collecting payments for every 1,000 fake impressions served, or as an affiliate getting paid per click or install. For example, the Methbot crew created a fake ad network and charged advertisers for billions of video ad views on their spoofed sites. Over more than two years, they billed at least $7 million for ads that no real person ever saw (law enforcement estimates of the full fraud ran much higher, up to $180 million in industry-wide losses). Similarly, 3ve’s botnet-based scheme generated about $29 million in fraudulent ad payments before it was dismantled.

Meanwhile, the costs for fraudsters mostly involve infrastructure and development. This includes renting servers or cloud instances (or buying stolen server time), purchasing or leasing IP address blocks, developing or acquiring malware, and sometimes paying “install networks” to distribute their malicious app or code. In the Methbot case, the perpetrators leased 650,000+ IP addresses from registrars and ISPs and used shell companies to register them under fake residential ISP names. They also paid for high-powered servers in U.S. datacenters (roughly 1,900 of them, likely costing on the order of $100,000 or more per month). These expenses are significant but dwarfed by the fraud revenue: renting 1,900 servers might cost $1–2 million per year, less than a single day’s take for an operation making $3–5 million per day, as White Ops reported. The profit margins can be enormous, which is why organized crime has gravitated toward ad fraud; by some accounts, it became second only to the drug trade in profitability around 2020.
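The cost-versus-revenue comparison above is simple arithmetic, sketched below using only the rough figures quoted in the paragraph (1,900 servers, $1–2 million per year in rent, $3–5 million per day in reported fraud revenue). These are estimates, not audited accounts, and the helper names are illustrative.

```python
# Figures quoted in the paragraph above; rough estimates, not audited accounts.
SERVERS = 1_900
ANNUAL_INFRA_COST_USD = (1_000_000, 2_000_000)    # estimated server rental per year
DAILY_FRAUD_REVENUE_USD = (3_000_000, 5_000_000)  # White Ops' reported daily take


def per_server_monthly(annual_cost: float, servers: int = SERVERS) -> float:
    """Implied rental price per server per month."""
    return annual_cost / servers / 12


def days_to_recoup(annual_cost: float, daily_revenue: float) -> float:
    """Days of fraud revenue needed to pay for a full year of infrastructure."""
    return annual_cost / daily_revenue


def revenue_to_cost_ratio(annual_cost: float, daily_revenue: float, days: int = 365) -> float:
    """Annual revenue divided by annual infrastructure cost."""
    return daily_revenue * days / annual_cost


if __name__ == "__main__":
    low_cost, high_cost = ANNUAL_INFRA_COST_USD
    low_rev, _high_rev = DAILY_FRAUD_REVENUE_USD
    print(f"per server/month: ${per_server_monthly(low_cost):.0f}-${per_server_monthly(high_cost):.0f}")
    # Worst case for the fraudster: highest cost, lowest revenue.
    print(f"days to recoup a year of servers: {days_to_recoup(high_cost, low_rev):.2f}")
    print(f"revenue/cost ratio (worst case): {revenue_to_cost_ratio(high_cost, low_rev):.0f}x")
```

With these inputs the implied rent is roughly $44–88 per server per month, and even in the fraudster’s worst case about two-thirds of one day’s revenue covers a year of servers (a ratio of roughly 550 to 1). The conclusion is only as good as the revenue estimate: the “at least $7 million” actually billed, cited earlier, implies a far thinner margin.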

Different schemes have different cost structures. Botnets (like 3ve.2) require developing malware and maintaining C2 infrastructure, but once the malware spreads virally or via exploit kits, adding each new infected PC is low-cost. The fraudsters essentially parasitize victims’ computers and internet connections for free. In contrast, app-based fraud (like DrainerBot) might involve paying developers or tricking them into including a malicious SDK, or outright buying an existing app with a large user base to update it with ad fraud capabilities. There is also a black market for install farms – for instance, paying a network of hackers to silently push your fraudulent app onto thousands of devices. However, even these costs (malware dev, hacker commissions) are offset by the continuous ad revenue from each infected device streaming ads 24/7.

From a higher vantage, the scale of global ad fraud revenue is staggering and growing every year. Estimates compiled by industry researchers and agencies show an alarming trend:

- In 2021, digital ad fraud worldwide was estimated at around $65 billion, roughly a third of U.S. digital ad spend that year.

- By 2023, global losses to ad fraud jumped to roughly $84–88 billion (about 22% of total digital ad spending that year).

- Forecasts by Statista and Juniper Research anticipate fraud will cost $100+ billion in the next year or two, and as much as $170–172 billion by 2028. That would represent over 23% of global ad spend by 2028, meaning nearly one in four ad dollars could be siphoned by fraud if trends continue.

Global digital ad fraud costs have skyrocketed from an estimated ~$35 billion in 2018 to ~$88 billion in 2023, and are projected to reach ~$172 billion by 2028. This trajectory underscores the rapid growth of the fraud economy.
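The growth rates implied by these figures can be made explicit with the standard compound annual growth rate (CAGR) formula, (end / start)^(1 / years) − 1. The sketch below uses only the approximate numbers quoted above, so its results inherit their uncertainty.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    # Approximate figures (USD billions) quoted in the text.
    print(f"2018-2023: {cagr(35, 88, 2023 - 2018):.1%} per year")               # 20.2% per year
    print(f"2023-2028 (projected): {cagr(88, 172, 2028 - 2023):.1%} per year")  # 14.3% per year
```

Historical growth of roughly 20% a year versus about 14% projected means the forecast assumes fraud growth slows but stays in double digits.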

These eye-popping figures explain why adversaries keep investing in new schemes. Even if an operation only survives for a few months before being caught, it can easily earn back its costs many times over. For instance, Methbot and 3ve together took in roughly $36 million between 2014 and 2018 (the $7 million and $29 million figures above), a sum few traditional cybercrimes can match. The fraud economy also benefits from a low risk of immediate consequences. Many fraudsters operate from jurisdictions with limited extradition; Methbot’s ringleader, Aleksandr Zhukov, a Russian national, was prosecuted in the U.S. only after he was arrested in Bulgaria and extradited. The chance of a coordinated takedown (which involves international law enforcement and industry cooperation) is relatively low compared to the number of active fraud operations. Thus, from an attacker’s ROI perspective, spending a few million on bots and servers to potentially earn tens of millions in ad revenue is a compelling criminal business model.

Regulatory and Industry Response: Governance Gaps and Accountability

From a strategic perspective, the battle against ad fraud is not just technical but also regulatory. The landscape today is a patchwork of self-regulation, general fraud laws, and emerging rules that only partially address the problem:

- Data Privacy Laws (GDPR, CCPA): Privacy regulations like the EU’s GDPR and California’s CCPA indirectly affect ad fraud by constraining data collection. These laws don’t target ad fraud specifically, but by limiting user tracking and mandating consent, they can cut off some methods fraudsters use (for example, fingerprinting users or syncing cookies across sites becomes harder). However, privacy laws can also hamper defenders’ visibility – e.g. fewer user identifiers might make it slightly harder to differentiate real users from bots if everyone is anonymous. Overall, GDPR/CCPA have made the digital ad ecosystem more transparent and consent-driven, which may help reduce certain malicious ad injection practices, but ad fraud per se isn’t the focus of these laws.

- Fraud and Cybercrime Laws: In the U.S., there is no ad-fraud-specific statute, but activities like Methbot and 3ve clearly violate laws against wire fraud, computer intrusion (hacking), and money laundering. The U.S. Department of Justice has successfully prosecuted some ad fraud rings using these laws – for example, Aleksandr Zhukov (Methbot’s “kingpin”) was convicted of multiple counts including wire fraud and money laundering, earning him a 10-year prison sentence. Similarly, members of the 3ve scheme were indicted for crimes like computer intrusion and aggravated identity theft (for stealing IP ranges and simulating users). These prosecutions send a message, but they are few relative to the number of fraudsters out there. Law enforcement faces jurisdictional issues – many perpetrators operate overseas – and must prioritize cases with the highest damages. Outside the U.S., some countries have started to recognize digital ad fraud as a form of organized crime or cybercrime. For instance, authorities in Europe collaborated on the 3ve takedown in 2018, and there’s increasing talk in the EU about treating ad impression fraud under laws against online financial fraud. Still, consistent enforcement is lacking. Victims (advertisers) often eat the losses quietly, and perpetrators often face minimal legal risk unless their activities are egregious enough to attract an international operation.

- Industry Standards and Self-Governance: The digital advertising industry has developed voluntary initiatives to curb fraud. We mentioned ads.txt and app-ads.txt as transparency mechanisms. There’s also TAG (Trustworthy Accountability Group), an industry body that certifies companies as adhering to anti-fraud standards. TAG’s “Certified Against Fraud” program requires publishers, ad tech vendors, and agencies to undergo independent audits and implement processes to reduce invalid traffic. Many major ad exchanges and demand-side platforms are TAG-certified, which has helped cut down some fraud (TAG claims significant reductions in fraud exposure for certified channels). The IAB Tech Lab continues to push new standards like sellers.json (which lets DSPs see the ownership of ad inventory supply paths) and the OpenRTB SupplyChain object, which tracks the sequence of resellers in a bid request. These standards aim to shine light into the opaque corners where fraud hides – e.g. a fake site can’t easily misrepresent itself if every hop in the supply chain is transparent. The challenge is that standards are only effective if widely adopted and accurately implemented. As long as some ad buyers and sellers don’t rigorously check ads.txt or verify supply chain objects, fraudsters will route through those weak links. Self-regulation has made progress but hasn’t eliminated the fundamental economic incentives to cheat.

- Platform Accountability (Google, Facebook, etc.): The big ad platforms publicly report their efforts against fraud. Google, for instance, releases an annual Ads Safety Report detailing how many billions of bad ads it blocked and how many fake accounts it suspended. In 2022, Google reported blocking 212 million ads for violating its misrepresentation and ad fraud policies. The platforms also issue credits to advertisers for invalid traffic detected after the fact, and partner in takedowns (Google was a key player in uncovering 3ve alongside White Ops). Facebook (Meta) similarly has anti-fraud systems for its ad network and has sued click farm operators in the past. Yet gaps remain: a lot of ad spend flows outside the walled gardens, into the long tail of ad exchanges and networks where enforcement is less strict. Even within major platforms, transparency is a concern, since advertisers often have to trust Google’s or Facebook’s internal metrics about how much fraud was filtered. This has led to some high-profile disputes; for example, Uber sued multiple ad networks in 2017, alleging they took Uber’s ad dollars but delivered bogus app installs via fraud (the case revealed that even well-known ad partners were implicated in allowing junk traffic). Major brands have pushed for independent audits of platform ad inventories to verify they aren’t riddled with IVT. The “trust but verify” principle is gaining traction, but not all platforms are equally open.

- Emerging Regulations (DSA, etc.): In the EU, the new Digital Services Act (DSA), effective 2024, imposes greater responsibility on large online platforms and marketplaces to police systemic risks, which could be interpreted to include ad fraud and manipulation. The DSA requires “very large online platforms” to assess and mitigate risks such as disinformation and deception. If ad fraud on a platform is found to be harming businesses or consumers, the platform could be forced to adjust its systems or face penalties. Additionally, regulatory bodies like the U.S. Federal Trade Commission (FTC) have signaled interest in digital advertising integrity. The FTC’s focus has traditionally been on consumer protection (e.g. going after fake endorsements or deceptive advertising content), but as ad budgets lost to fraud ultimately harm consumers (higher product prices, etc.), one could imagine future rules or guidance specifically addressing advertising fraud practices. So far, though, regulation lags behind the problem: we have broad laws against fraud, but no detailed regulatory framework that an ad tech company must follow to combat IVT beyond industry best practices.
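To illustrate how sellers.json and the OpenRTB SupplyChain object, mentioned among the industry standards above, fit together: each node in a bid request’s `schain` names an ad system (`asi`) and the seller ID within it (`sid`), and that ad system’s sellers.json should list the ID. A minimal sketch of that cross-check follows. It assumes both documents have already been fetched and parsed into dicts, uses only a few fields of each specification, and the domains and IDs are invented.

```python
def audit_supply_chain(schain: dict, sellers_by_system: dict[str, dict]) -> list[str]:
    """Cross-check an OpenRTB SupplyChain object against sellers.json files.

    schain: parsed SupplyChain object ({"ver", "complete", "nodes": [...]}).
    sellers_by_system: parsed sellers.json documents keyed by ad system domain
    (the node's "asi"); fetching those files is out of scope here.
    """
    problems = []
    if schain.get("complete") != 1:
        problems.append("incomplete_chain")  # not every hop back to the owner is listed
    for i, node in enumerate(schain.get("nodes", [])):
        asi = str(node.get("asi", "")).lower()
        sid = str(node.get("sid", ""))
        sellers_json = sellers_by_system.get(asi)
        if sellers_json is None:
            problems.append(f"node{i}:no_sellers_json:{asi}")
            continue
        known_ids = {str(s.get("seller_id")) for s in sellers_json.get("sellers", [])}
        if sid not in known_ids:
            problems.append(f"node{i}:unknown_seller:{asi}/{sid}")
    return problems


if __name__ == "__main__":
    sellers = {
        "exchange-a.example": {"sellers": [
            {"seller_id": "pub-1234", "seller_type": "PUBLISHER", "domain": "example-publisher.com"}]},
        "exchange-b.example": {"sellers": [
            {"seller_id": "77", "seller_type": "INTERMEDIARY", "domain": "reseller.example"}]},
    }
    schain = {"ver": "1.0", "complete": 1, "nodes": [
        {"asi": "exchange-a.example", "sid": "pub-1234", "hp": 1},
        {"asi": "exchange-b.example", "sid": "99", "hp": 1},  # ID not in sellers.json
    ]}
    print(audit_supply_chain(schain, sellers))  # ['node1:unknown_seller:exchange-b.example/99']
```

A hop whose seller ID cannot be found is the kind of weak link described above; whether to drop such bids or merely discount them is a policy choice.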

The net effect of the current governance landscape is that accountability is improving but still porous. Industry collaboration has achieved notable wins (Methbot, 3ve, and more recent CTV fraud rings have been busted through joint efforts), and standards have closed off easy loopholes like simple domain spoofing. But fraudsters adapt to the rules of the game, often operating just outside the reach of any single jurisdiction or exploiting the trust between various ad tech intermediaries. No single regulator or company sees the full picture, which is exactly what fraud networks prey upon.

One proposal on the horizon is for more public-private partnerships and information sharing hubs focused on ad fraud (similar to how banks share data on money laundering). The ad industry’s “Human Collective” initiative by HUMAN Security is one example, where ad platforms and brands share threat intel to collectively shield against identified botnets. Over time, we may see stronger legal mandates for ad supply chain transparency and penalties for willful negligence (for instance, if an ad exchange consistently fails to vet traffic sources, they could be held liable). Until then, advertisers and platforms must navigate a landscape where due diligence and voluntary measures are crucial to stay ahead of fraud.

Role-Based Insights: What CEOs, CMOs, CISOs, and Security Teams Should Know

Digital ad fraud sits at the intersection of marketing and security. Different stakeholders in an organization need tailored insights to address this challenge effectively. Below is an action checklist for key roles:

CEO – Strategic & Financial Oversight

- Big Picture Impact: Understand that ad fraud directly erodes marketing ROI and can cost your business millions in wasted spend. It’s not just a “marketing problem”: it is an operational risk, and the waste (tens of billions of dollars across industries) can be large enough to affect reported results. Ensure it’s discussed in board meetings as part of risk management, similar to cybersecurity breaches.

- Accountability: Ask for transparency from your marketing and ad agencies on how they are tackling fraud. Set KPIs that include validated human reach, not just raw impressions or clicks. This drives teams to prioritize quality of traffic over vanity metrics.

- Resource Allocation: Be willing to invest in anti-fraud measures (tools or audits). The cost of prevention (such as third-party verification services or in-house data scientists analyzing traffic) is often a fraction of the losses prevented. For instance, saving even 10% of a $10M ad budget from fraud is a $1M direct return.

- Cross-Functional Empowerment: Champion a collaborative approach – direct your CISO and CMO to work together. Ad fraud often falls between the cracks; by explicitly assigning joint responsibility, you ensure security expertise is applied to marketing’s blind spots.

CMO – Marketing Effectiveness and Brand Protection

- Budget Efficacy: Recognize that a portion of your ad spend is inevitably being siphoned by bots. Global rates hover around 15-20% of digital spend – benchmark your own campaigns. Insist on fraud-adjusted performance metrics from your team and agencies (e.g. report human-only impressions, adjusted CPA after filtering fraud).

- Demand Transparency: Use your leverage with ad platforms and agencies to get detailed placement reports. Utilize industry tools: ensure all your media buys are on ads.txt-authorized inventory and ask for TAG Certified partners. If a network or publisher can’t demonstrate anti-fraud compliance, reconsider investing there.

- Verification Tools: Incorporate at least one third-party verification service (IAS, DV, HUMAN, etc.) in all campaigns. These can operate as a “second set of eyes” to catch fraud that the primary platform might miss. Set up pre-bid filters and post-bid monitoring – for example, block known fraud categories (data-center traffic, known IVT bots) in real time, and have daily reports on suspicious activity.

- Brand Safety Angle: Remember that fraud can hurt your brand indirectly. Your ads might end up on shady or fake websites that tarnish brand image or, worse, malware-laden apps (as seen with DrainerBot). By fighting fraud, you also improve brand safety. Communicate this dual benefit to get support for anti-fraud initiatives.
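Fraud-adjusted metrics, as recommended for CMOs above, are straightforward to compute once a verification vendor’s invalid-traffic counts are available. This sketch assumes a hypothetical `CampaignStats` record and uses invented numbers; what counts as “invalid” depends entirely on the vendor’s classification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CampaignStats:
    spend_usd: float
    impressions: int
    invalid_impressions: int  # as flagged by a verification vendor
    conversions: int
    invalid_conversions: int  # conversions traced to invalid traffic


def fraud_adjusted(stats: CampaignStats) -> dict[str, float]:
    """Compare raw metrics with versions that count only valid (human) activity."""
    if stats.impressions <= 0:
        raise ValueError("campaign has no impressions")
    human_imps = stats.impressions - stats.invalid_impressions
    valid_conv = stats.conversions - stats.invalid_conversions
    inf = float("inf")
    return {
        "ivt_rate": stats.invalid_impressions / stats.impressions,
        "raw_cpm": stats.spend_usd / stats.impressions * 1000,
        "human_cpm": stats.spend_usd / human_imps * 1000 if human_imps > 0 else inf,
        "raw_cpa": stats.spend_usd / stats.conversions if stats.conversions > 0 else inf,
        "adjusted_cpa": stats.spend_usd / valid_conv if valid_conv > 0 else inf,
    }


if __name__ == "__main__":
    # Hypothetical campaign: $100k spend, 20% of impressions flagged as invalid.
    report = fraud_adjusted(CampaignStats(100_000, 20_000_000, 4_000_000, 2_000, 400))
    for name, value in report.items():
        print(f"{name}: {value:,.2f}")
```

In this made-up example the $5.00 CPM a dashboard would show is really $6.25 per thousand human impressions, and the $50 CPA becomes $62.50 once fraudulent conversions are removed.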

CISO – Security, Threat Intelligence, and Compliance

- Ad Fraud as Cyber Threat: Treat major ad fraud schemes as you would other cyberattacks, since they involve bots, malware, and threat actors. Plug into threat intel feeds from ad fraud investigations (many overlap with botnet and malware intel). For instance, if millions of consumer devices are infected with an ad-fraud botnet, that is a potential security threat to your customers or even to your corporate systems.

- Protect Company Assets: Ensure your company’s digital assets aren’t being spoofed in ads. Implement ads.txt on your web properties if you sell any ad inventory. If your company has a mobile app with ads, use app-ads.txt. This prevents fraudsters from impersonating your domains to steal ad revenue or run malvertising in your name.

- Data Sharing: Collaborate with the marketing analytics team to access ad traffic logs and patterns. Security analytics can often spot anomalies (e.g. a flood of hits from known botnet IPs) that marketing might overlook. By correlating marketing data with firewall or network logs, you might identify a fraud issue faster together. Some security teams set up joint dashboards with marketing showing real-time IVT levels.

- Regulatory Compliance: Advise the business on any legal exposure related to ad fraud. For example, if fraudulent traffic artificially inflated your user metrics that were reported to investors or used in financial decisions, there could be compliance implications. While not directly a CISO’s domain, it falls under data integrity – ensure that internal audit or legal is aware of how ad fraud is handled so that stakeholder reporting remains accurate.

Security & Analytics Teams – Operational Defense and Monitoring

- Implement Technical Controls: Deploy scripts or services that can perform bot detection on your web traffic (if you host ads or landing pages). For instance, use CAPTCHA or bot defense on pages where ad-driven traffic flows in, to gauge what portion of visitors are non-human. Share these findings with marketing – if a campaign is sending 50% bot clicks to your site, that’s actionable data.

- Fraud Incident Response: Incorporate ad fraud scenarios into your incident response plan. If marketing reports a sudden spike in traffic that looks fishy, treat it like a security incident: preserve data, analyze the sources (IPs, user agents), see if it maps to known botnets or fraud signatures. Engage your threat intel resources – often fraud networks reuse infrastructure from other cyber crimes.

- Analytics and Machine Learning: Leverage your data science skills to build internal models spotting fraud. For example, cluster conversion events by attributes – genuine users might show diversity in behavior, whereas fake conversion events might cluster on certain IP ranges or exhibit the same session length. Even simple anomaly scripts (z-score outliers on click rates, etc.) can augment the commercial tools. Feed this back into campaign optimization in near-real-time.

- Continuous Education and Updates: Stay updated on the latest fraud schemes (via webinars, security conferences, the aforementioned industry reports). The tactics evolve quickly – e.g. the rise of CTV ad fraud on smart TVs, or bots that defeat CAPTCHA. Ensure your team refreshes detection playbooks accordingly. It can also be valuable to periodically brief the marketing team on these developments in layman’s terms, to raise overall vigilance.
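The log-analysis heuristics described earlier (bursts of clicks from one IP range with no conversions) and the simple anomaly scripts suggested here can be sketched in a few lines. The example assumes each click event is a dict with hypothetical `ip`, `converted`, and `session_seconds` keys. It groups clicks by IPv4 /24 range, flags ranges whose click volume is a z-score outlier yet never convert, and separately flags session lengths shared by an unusually large fraction of conversions. The thresholds (z ≥ 3, a 20% share) are illustrative defaults, not tuned values.

```python
import ipaddress
import statistics
from collections import Counter


def subnet24(ip: str) -> str:
    """Collapse an IPv4 address to its /24 range (IPv4-only assumption)."""
    return str(ipaddress.ip_network(f"{ip}/24", strict=False))


def flag_subnets(events: list[dict], z_threshold: float = 3.0) -> list[str]:
    """Flag /24 ranges whose click volume is a z-score outlier AND that never convert."""
    clicks: Counter = Counter()
    conversions: Counter = Counter()
    for e in events:
        net = subnet24(e["ip"])
        clicks[net] += 1
        conversions[net] += int(e["converted"])
    counts = list(clicks.values())
    if len(counts) < 2:
        return []
    mean, sd = statistics.mean(counts), statistics.pstdev(counts)
    if sd == 0:
        return []
    return sorted(
        net for net, n in clicks.items()
        if (n - mean) / sd >= z_threshold and conversions[net] == 0
    )


def repeated_session_lengths(events: list[dict], min_share: float = 0.2) -> list[int]:
    """Session lengths (seconds) shared by a suspiciously large share of conversions."""
    lengths = Counter(e["session_seconds"] for e in events if e["converted"])
    total = sum(lengths.values())
    if total == 0:
        return []
    return sorted(s for s, n in lengths.items() if n / total >= min_share)


if __name__ == "__main__":
    # Synthetic data: 12 ordinary ranges (5 clicks, 1 conversion each) plus one
    # documentation range sending 100 clicks that never convert.
    events = [
        {"ip": f"10.0.{i}.{j + 1}", "converted": j == 0, "session_seconds": 30 + i + j}
        for i in range(12) for j in range(5)
    ]
    events += [{"ip": f"198.51.100.{k % 250}", "converted": False, "session_seconds": 31}
               for k in range(100)]
    print(flag_subnets(events))              # ['198.51.100.0/24']
    print(repeated_session_lengths(events))  # []
```

One property worth knowing: with the population standard deviation, no point among n groups can have a z-score above √(n−1) (Samuelson’s inequality), so a threshold of 3 cannot be exceeded with fewer than ten ranges. Small datasets need a lower threshold or a robust alternative such as the median absolute deviation.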

Conclusion

Digital ad fraud has matured into a complex, global adversary – one that blends the creativity of marketers with the cunning of hackers. We’ve pulled back the curtain on how these schemes are structured: from datacenter bot farms clicking on ads in bulk, to botnets of everyday devices silently loading ads in hidden windows, to rogue mobile SDKs that convert user phones into revenue-generating zombies. We’ve also seen that while detection technologies are improving, there is no single magic bullet. Each defensive layer, whether behavioral analytics or industry transparency initiatives, addresses part of the problem yet leaves other gaps that fraudsters exploit.

For businesses investing heavily in digital advertising, the takeaway is clear: proactive, multi-faceted defense is essential. This means not only relying on your ad platforms to handle it, but taking an active role – use independent verification, demand transparency, and involve your security experts. It’s a team sport: marketing, security, and even finance leaders must coordinate to ensure that the company’s ad spend is actually reaching real human audiences. The cost of complacency is evident in the ever-growing fraud loss numbers, but so is the payoff of vigilance – even modest improvements in fraud prevention can recapture significant budget and drive higher real ROI.

Finally, as an industry and regulatory matter, momentum is building to choke off ad fraud’s oxygen. Collaborative takedowns and new standards are pushing fraudsters into tighter corners. Still, like any sophisticated criminal enterprise, they will continue probing for weakness. By understanding the enemy’s playbook – their infrastructure, techniques, and economics – we can anticipate their moves and fortify our defenses accordingly. In doing so, we not only protect our own organizations’ interests, but also contribute to the long-term integrity and sustainability of the digital advertising ecosystem. The fight against ad fraud is far from over, but with technical rigor and strategic alignment, it’s a fight we can increasingly tip in our favor.

At GWRX Group, we specialize in assessing, mitigating, and securing enterprise environments against modern cyber threats. If you seek to validate your security posture or require assistance in implementing these defenses, contact our experts today.

📢 Stay ahead of cyber threats—protect your data, secure your network, and fortify your business!


References

Business of Apps.

Ad fraud statistics (2025)

🔗 https://www.businessofapps.com/ads/ad-fraud/research/ad-fraud-statistics/

Juniper Research.

Quantifying the cost of ad fraud: 2023–2028 (Whitepaper)

🔗 https://fraudblocker.com/wp-content/uploads/2023/09/Ad-Fraud-Whitepaper_Juniper-Research.pdf

AdExchanger. (2025).

Adalytics: The ad industry’s bot problem is worse than we thought

🔗 https://www.adexchanger.com/platforms/adalytics-the-ad-industrys-bot-problem-is-worse-than-we-thought/

Integral Ad Science. (2025).

IAS’s response to the Adalytics report

🔗 https://integralads.com/insider/ias-response-adalytics-report/

Axis Mobi. (n.d.).

Fraud detection and prevention: Successful case studies

🔗 https://www.axismobi.com/blog/fraud-detection-and-prevention-case-studies/

HUMAN Security.

Methbot: Then and now

🔗 https://www.humansecurity.com/learn/blog/methbot-then-and-now

Paladin Capital Group.

White Ops exposes largest and most profitable ad fraud bot operation

🔗 https://www.paladincapgroup.com/white-ops-exposes-largest-and-most-profitable-ad-fraud-bot-operation/

Krebs on Security.

Report: $3-5M in ad fraud daily from ‘Methbot’

🔗 https://krebsonsecurity.com/2016/12/report-3-5m-in-ad-fraud-daily-from-methbot/

HUMAN Security.

3ve: Massive online ad fraud operation (PSA)

🔗 https://www.humansecurity.com/learn/resources/3ve-massive-online-ad-fraud-operation-psa

Cybersecurity and Infrastructure Security Agency.

3ve – Major online ad fraud operation

🔗 https://www.cisa.gov/news-events/alerts/2018/11/27/3ve-major-online-ad-fraud-operation

Axios.

Authorities stop giant digital ad fraud scheme

🔗 https://www.axios.com/2018/11/28/authorities-stop-giant-digital-ad-fraud-scheme

Oracle.

Oracle exposes “DrainerBot” mobile ad fraud operation

🔗 https://www.oracle.com/corporate/pressrelease/mobilebot-fraud-operation-022019.html

Integral Ad Science.

DrainerBot fraud update: Full mobile fraud protection for IAS clients

🔗 https://integralads.com/news/drainerbot-fraud-update-full-mobile-fraud-protection-ias-clients/

Juniper Research.

The current digital advertising fraud landscape

🔗 https://www.juniperresearch.com/resources/blog/the-current-digital-advertising-fraud-landscape/

U.S. Department of Justice.

Russian cybercriminal sentenced to 10 years in prison for digital advertising fraud scheme

🔗 https://www.justice.gov/usao-edny/pr/russian-cybercriminal-sentenced-10-years-prison-digital-advertising-fraud-scheme

U.S. Department of Justice.

Two international cybercriminal rings dismantled and eight defendants indicted, causing tens of millions of dollars in losses to U.S. advertising industry

🔗 https://www.justice.gov/usao-edny/pr/two-international-cybercriminal-rings-dismantled-and-eight-defendants-indicted-causing

IAB Tech Lab.

Authorized digital sellers (ads.txt)

🔗 https://iabtechlab.com/ads-txt/

Trustworthy Accountability Group.

TAG launches anti-fraud certification program

🔗 https://www.tagtoday.net/pressreleases/tag-launches-anti-fraud-certification-program

IAB Tech Lab.

Sellers.json

🔗 https://iabtechlab.com/sellers-json/

IAB Tech Lab.

Supply chain & foundations

🔗 https://iabtechlab.com/standards/supply-chain-foundations/

Edelson, L.

When social trust is the attack

🔗 https://medium.com/cybersecurity-for-democracy/when-social-trust-is-the-attack-f18262a4cb35

HUMAN Security.

Introducing the Human Collective

🔗 https://www.humansecurity.com/learn/blog/introducing-the-human-collective/

Google and White Ops.

The Hunt for 3ve